AI is everywhere, and executives are under pressure to deliver real results with it. But building AI-powered products isn’t about hiring the trendiest new role or throwing people at the problem — it’s about assembling the right engineering team.

In this post, we’ll walk you through the AI engineer tech stack that actually delivers value in production and how to hire for it strategically. We’ll also explain why “prompt engineer” is more of a skill than a standalone role — and how partnering with a nearshore team can give you the talent and speed you need.

Why Most AI Hiring Strategies Miss the Mark

Many companies make mistakes in their AI hiring strategies by treating it like a research project rather than a product development initiative. They hire PhD-level machine learning experts or chase flashy titles, but overlook the practical engineering roles that hold the system together.

In reality, building and managing an AI tech stack requires a team with diverse skills – data science, ML, software engineering, system integration, and more – which are hard to find. Focusing only on niche AI roles can leave out these fundamentals.

To have real-world impact, start with AI application engineering (making models actually work in your product), not just theory or “cool research”. Production-ready AI isn’t about knowing 20 fancy libraries – it’s about wielding a core set of reliable tools to solve actual problems.

The AI Engineer Tech Stack (Explained Simply)

Building an AI product involves multiple layers. Here are the key roles and skills you need, in plain terms:

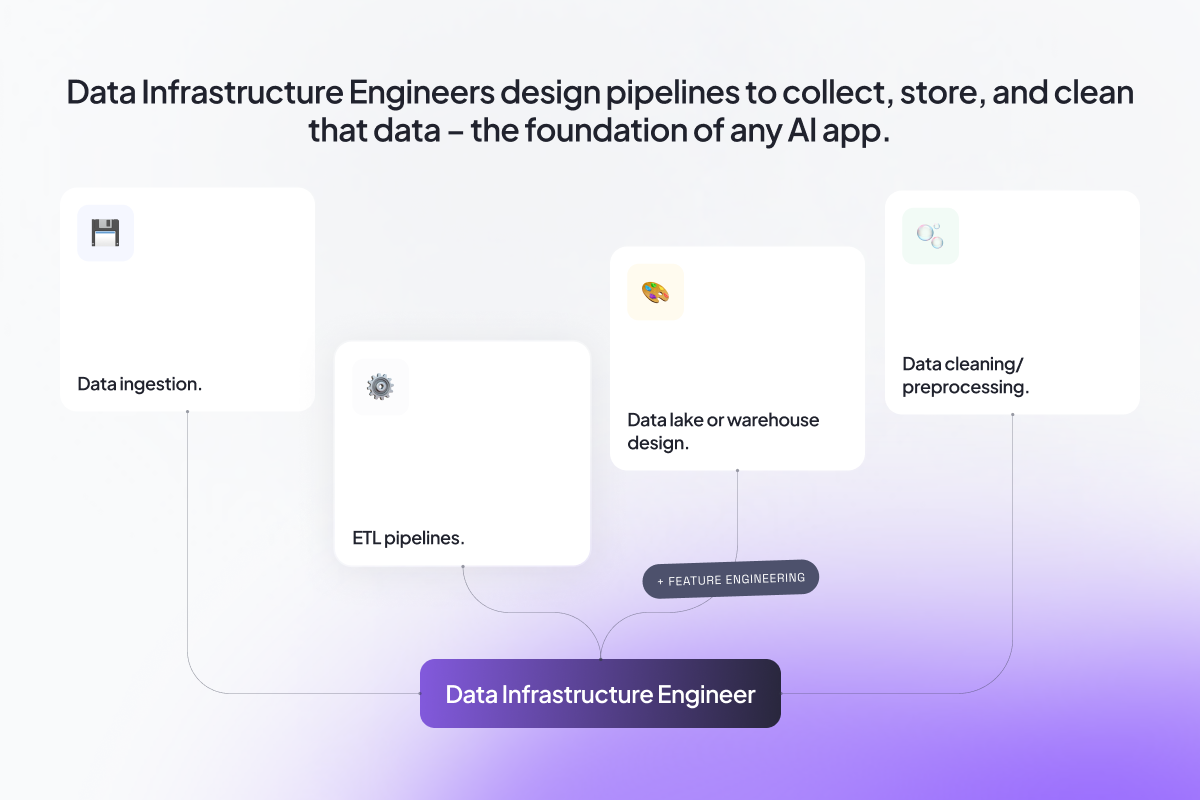

1. Data Infrastructure Engineer

Every AI project begins (and ends) with data. Data Infrastructure Engineers design pipelines to collect, store, and clean that data – the foundation of any AI app.

- They set up ingestion tools (e.g., AWS Kinesis/Glue, Azure Data Factory) to gather raw data from databases, sensors, APIs or web sources, and put it into storage systems like S3 or Azure Blob.

- They also ensure data quality: removing noise, handling missing values, normalizing and encoding features as needed.

This work might seem “invisible,” but it’s critical: clean, well-organized data allows ML models to work correctly.

Key functions: Data ingestion, ETL pipelines, data lake or warehouse design, data cleaning/preprocessing, feature engineering.

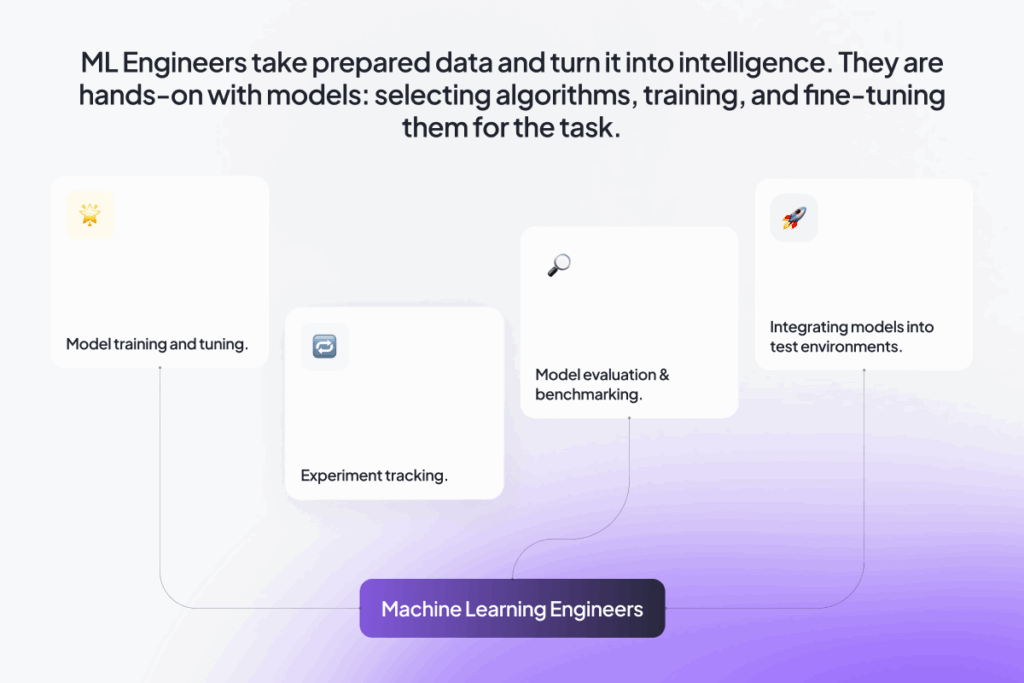

2. Machine Learning Engineer

ML Engineers take prepared data and turn it into intelligence. They are hands-on with models: selecting algorithms, training, and fine-tuning them for the task. Today, most companies use large pre-trained foundation models (GPT-4, Claude, etc.) and customize them.

ML Engineers set up experiment pipelines (tracking data, configs, metrics) and fine-tune these models on your own data (for example, using OpenAI’s fine-tuning APIs). They optimize models for performance (accuracy, speed, cost) and handle tasks like evaluation and iteration.

Key functions: Model training and tuning, experiment tracking, model evaluation and benchmarking, and integrating models into test environments.

This phase involves selecting appropriate algorithms, training the models, and fine-tuning them to achieve optimal performance.

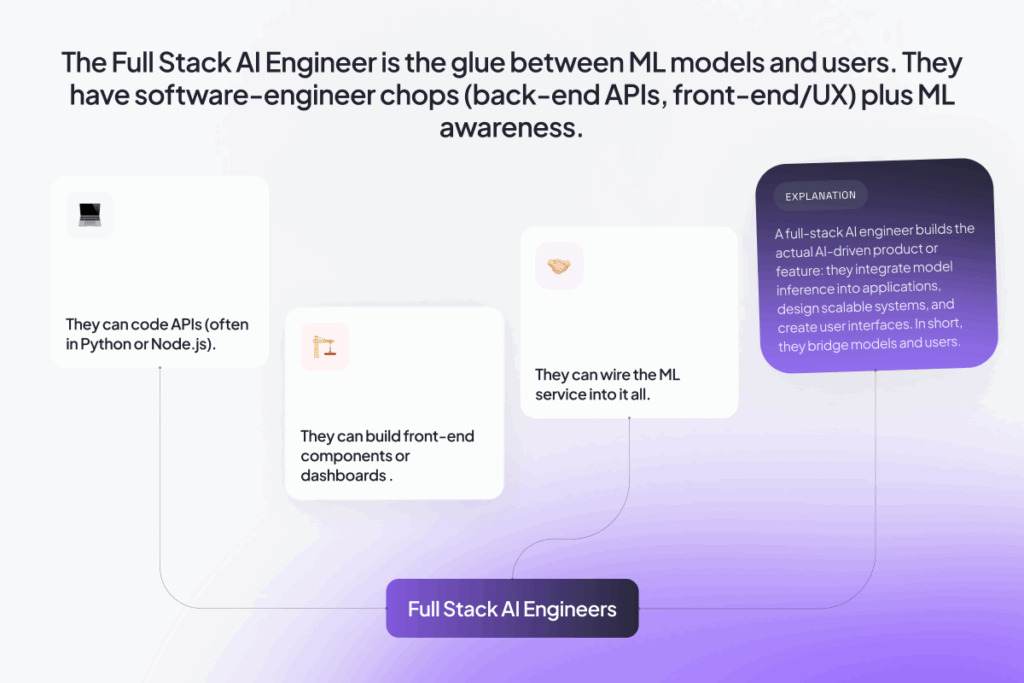

3. Full Stack AI Engineer

The Full Stack AI Engineer is the glue between ML models and users. They have software-engineer chops (back-end APIs, front-end/UX) plus ML awareness. A full-stack AI engineer builds the actual AI-driven product or feature: they integrate model inference into applications, design scalable systems, and create user interfaces. In short, they bridge models and users.

A strong full-stack AI engineer is truly full-stack. They can:

- Code APIs (often in Python or Node.js),

- Build front-end components or dashboards

- Wire the ML service into it all

They know how to consume LLM APIs, handle streaming or asynchronous responses, and store/retrieve embeddings using vector databases like Pinecone or Weaviate. They’re comfortable with containerization (Docker/Kubernetes), cloud platforms (AWS, GCP, Azure) and continuous integration so models run reliably.

Overall, a skilled full-stack AI engineer is key to bridging models and users. They know things like FastAPI or Flask for APIs, React or Angular for UI (since core skills like React and Python still dominate AI projects), and ML toolkits under the hood. They also think about user experience: how the AI feature fits into the product workflow, how to test for latency or unexpected model behavior, and how to ship iterative updates. In essence, they unify product, design, and ML into a working application.

4. MLOps Engineer

MLOps (Machine Learning Operations) engineers ensure your models run smoothly in production. They set up the infrastructure and processes around models: automated training pipelines, model versioning, deployments (on cloud or edge), monitoring, and scaling. In other words, they treat ML like code. Modern MLOps platforms streamline the ML lifecycle by automating model training, deployment, and monitoring—ensuring reproducibility, scalability, and faster time to production.

These engineers know tools like MLflow, Kubeflow, or Sagemaker, and handle tasks such as A/B testing different model versions, detecting data drift or model decay, and triggering retraining jobs. They also manage resources (GPUs, clusters) so models run efficiently.

In short, MLOps/DevOps roles handle everything from CI/CD pipelines for ML to logging and alerting, keeping your AI system reliable. A full-stack AI engineer usually needs to be MLOps-savvy, or you might have dedicated MLOps specialists on larger teams.

5. Research Scientist (Optional)

A Research Scientist with a PhD or advanced academic background is usually essential only when working on cutting-edge AI R&D, such as building custom foundation models or developing entirely new AI algorithms. For most business applications, though, this level of specialization is not necessary.

Today, most companies rely on pre-trained foundation models (like GPT-4 or LLaMA) instead of building models from scratch. As a result, the most effective teams are often made up of application-focused engineers—including ML engineers, full-stack AI developers, MLOps professionals, and human-in-the-loop experts who can fine-tune, adapt, and evaluate models using real-world data.

If your team is working with LLMs or multimodal models, you’ll likely need to integrate high-quality training data, feedback loops, and evaluation tasks. That’s where technical data annotators and AI training specialists become critical. These professionals help structure and validate the data that drives model performance—making them a core part of modern AI teams, even if they’re not writing model architecture from scratch.

Building Applications with Foundation Models

Modern AI products are rarely built by training models from scratch. Instead, they rely on pre-trained foundation models—such as GPT-4, Claude, or open-source alternatives—that offer powerful general-purpose capabilities. These models are then adapted through prompt engineering or fine-tuning and embedded into application logic.

However, the true competitive advantage lies not in the model itself, but in the application layer that surrounds it. As foundation models become increasingly commoditized, the real differentiation comes from how effectively they are integrated into end-user experiences.

This requires a strong focus on application engineering, including:

- Retrieval-augmented generation (RAG): Supplying models with relevant context from internal data using vector databases.

- API orchestration: Coordinating multiple models or services to deliver seamless functionality.

- End-to-end user experience: Designing interfaces and workflows that turn model outputs into real business value.

Delivering on these elements demands a combination of software engineering, product development, and machine learning expertise—as well as a deep understanding of your domain to provide the right data and context for meaningful outcomes.

Prompt Engineering: Role, Skill, or Hype?

Once a buzzword, “prompt engineering” is now widely understood to be a skill, not a standalone role. Early on, some companies hired prompt specialists—often with limited technical backgrounds—to extract better responses from large language models. But the demand for that title has since declined sharply.

Today, effective prompt design is a core competency expected of any AI engineer working with LLMs. That doesn’t mean it’s irrelevant. Strong engineers should know how to design, test, and iterate on prompts—but also how to integrate them into real applications.

In interviews, assess their prompt skills in context: Can they handle model quirks? Can they build reliable systems around LLMs? Prompting alone isn’t enough—it’s just one tool in a broader engineering toolkit.

Get Started with Your AI Team

You don’t need a massive team to start building with AI. In fact, overstaffing early can slow progress and dilute focus. A lean, high-performing squad—typically composed of a data infrastructure engineer, an ML engineer, and a full-stack AI engineer—is often enough to build and validate your first AI-driven feature or product. This compact “AI pod” can move fast, ship iteratively, and demonstrate real impact without unnecessary overhead.

As you scale your AI team, adopting a nearshore model can be a strategic advantage. Nearshoring involves hiring engineering talent from countries within close geographic and time-zone proximity to your own—commonly in Latin America, Canada, and parts of Eastern Europe for U.S.-based companies. Among these, Latin America has become especially popular due to the strong pipeline of technically skilled professionals, high English fluency, and full workday overlap with U.S. time zones.

U.S. tech leaders are increasingly choosing nearshore over offshore to improve collaboration, reduce latency in decision-making, and maintain agile development cycles without sacrificing quality or cultural alignment. With nearshore rates typically 30–40% lower than U.S.-based salaries, companies gain cost efficiency while still accessing senior-level engineers capable of driving complex AI projects.

In short, the nearshore model enables you to scale smartly: build faster, stay lean, and maintain tight feedback loops—without compromising execution.

Build Smarter with BEON.tech

At BEON.tech, we partner with some of the world’s most innovative companies—including several NASDAQ-listed organizations—to build high-impact AI teams. Our network features top-tier engineering talent from across Latin America, carefully vetted for technical excellence, English fluency, and strong product sensibility.

Whether you need a full-stack AI engineer, ML specialist, or data infrastructure expert, we provide pre-screened professionals who integrate seamlessly into your team and timezone. With cost efficiency up to 40% compared to U.S. rates, low turnover, and clear career growth paths, BEON.tech ensures long-term engagement and high performance.

We don’t just fill roles—we help you assemble lean, agile squads that deliver real AI applications, not just experiments.

Ready to build AI that works? Let BEON.tech be your strategic partner in engineering scalable, production-ready AI solutions.

Explore our

Leadership in Software Development: Styles & Skills for Elite Teams

Software development is driven by innovation and collaboration—but these forces don’t flourish on their own. Behind every high-performing engineering team is strong leadership that sets direction, builds alignment, and empowers people to do their best work. In today’s fast-evolving tech landscape, leadership in software development matters more than ever. Teams are scaling fast, becoming more…

From Niche to Norm: What AI Tools Have Top Engineers Incorporated into Their Stack?

It’s no secret that AI tools are reshaping how developers work. From automating boilerplate code to boosting daily productivity, artificial intelligence has evolved from a sidekick to a co-pilot — and in many cases, the pilot itself. The adoption of these tools is rapidly becoming the norm, with usage rates ranging from 70% to 82%…

How Much Does It Cost to Hire a Software Developer? Freelance vs Full-Time Developers

The tech talent market is extremely tight. U.S. demand for developers is soaring: one forecast estimates 7.1 million tech jobs by 2034 (up from 6M in 2023), and 70% of tech workers report multiple job offers. Concurrently, skills like generative AI are in explosive demand (job postings up ~1,800%). As a result, companies struggle to…